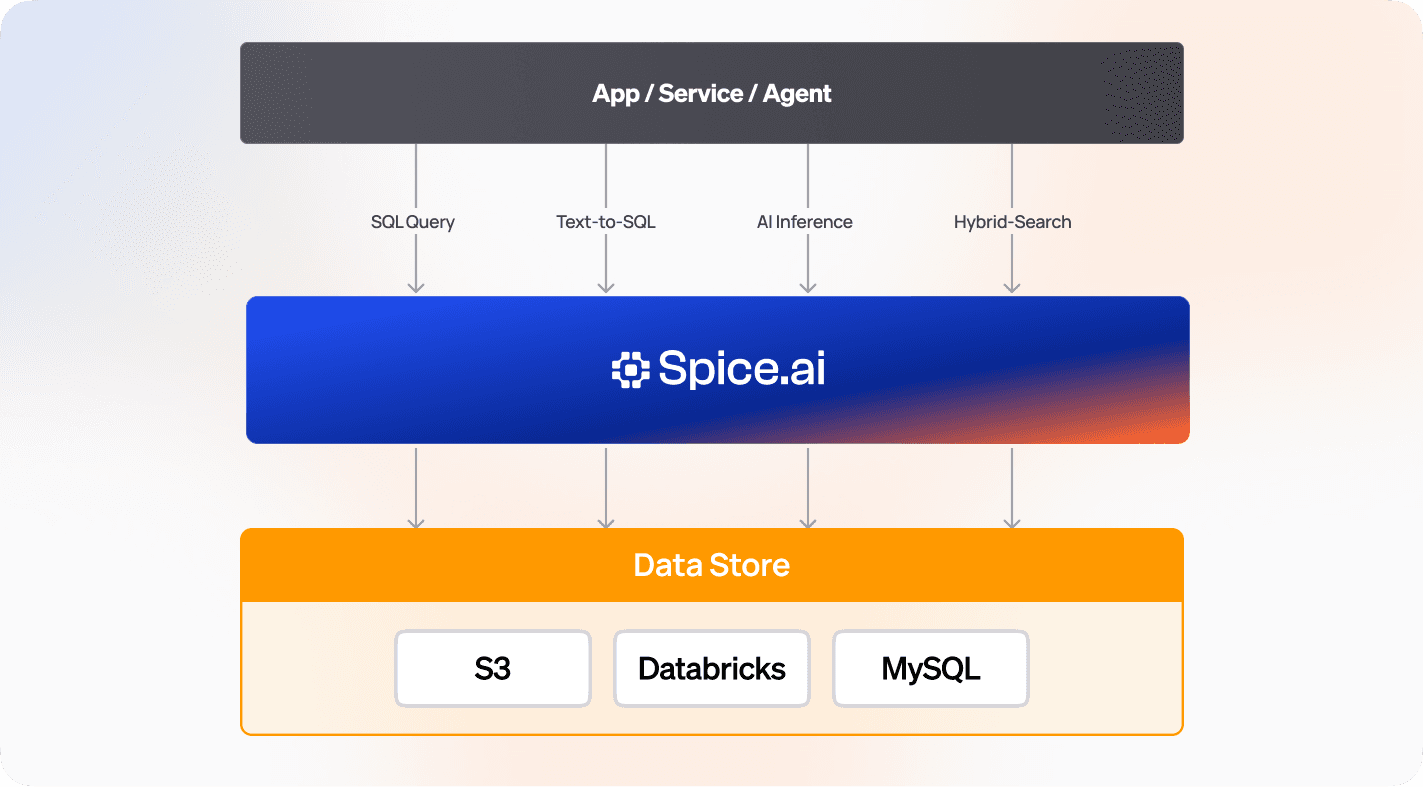

Ground AI in enterprise data

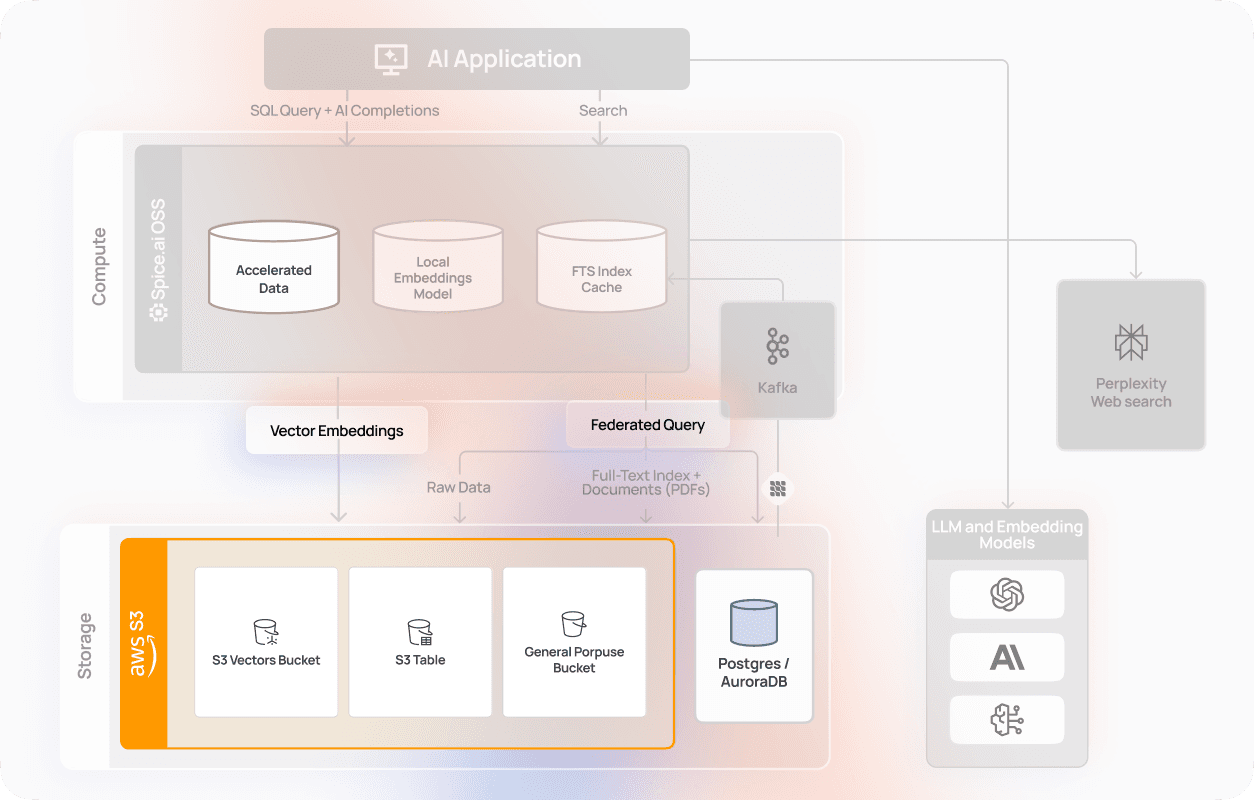

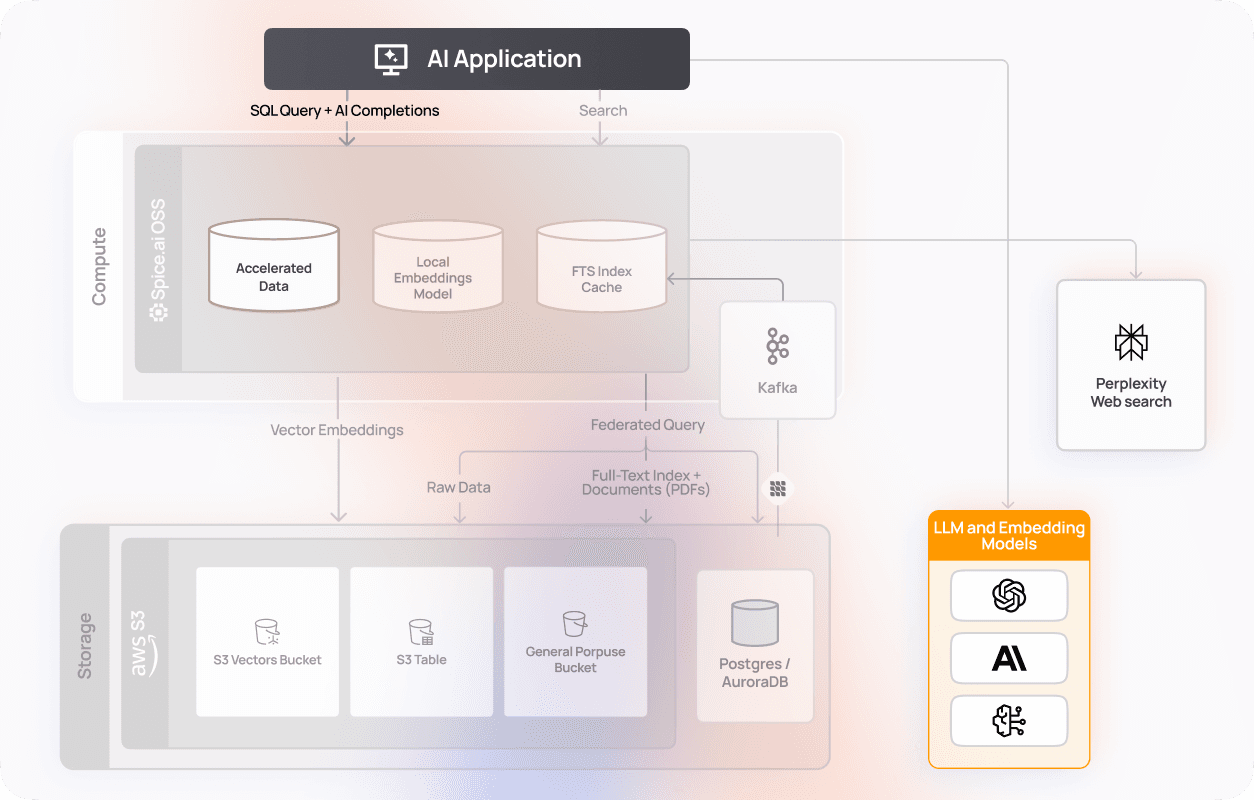

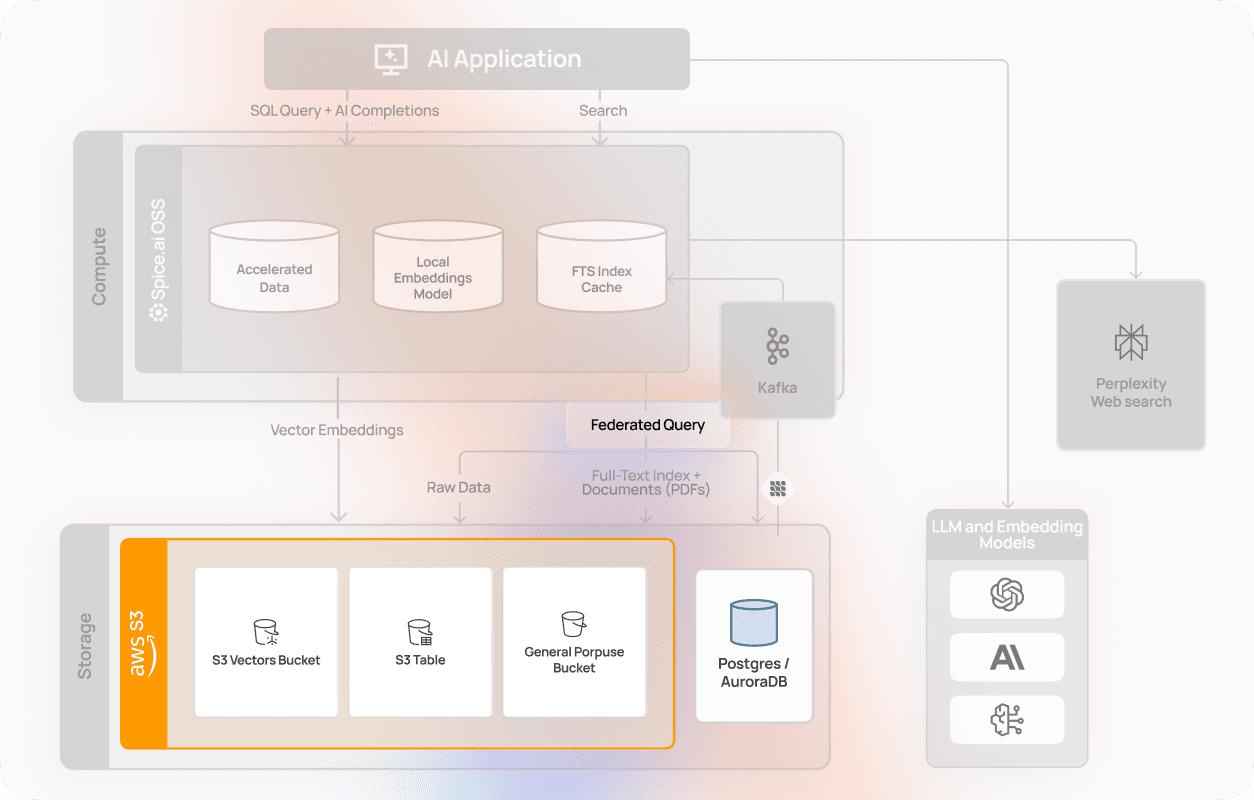

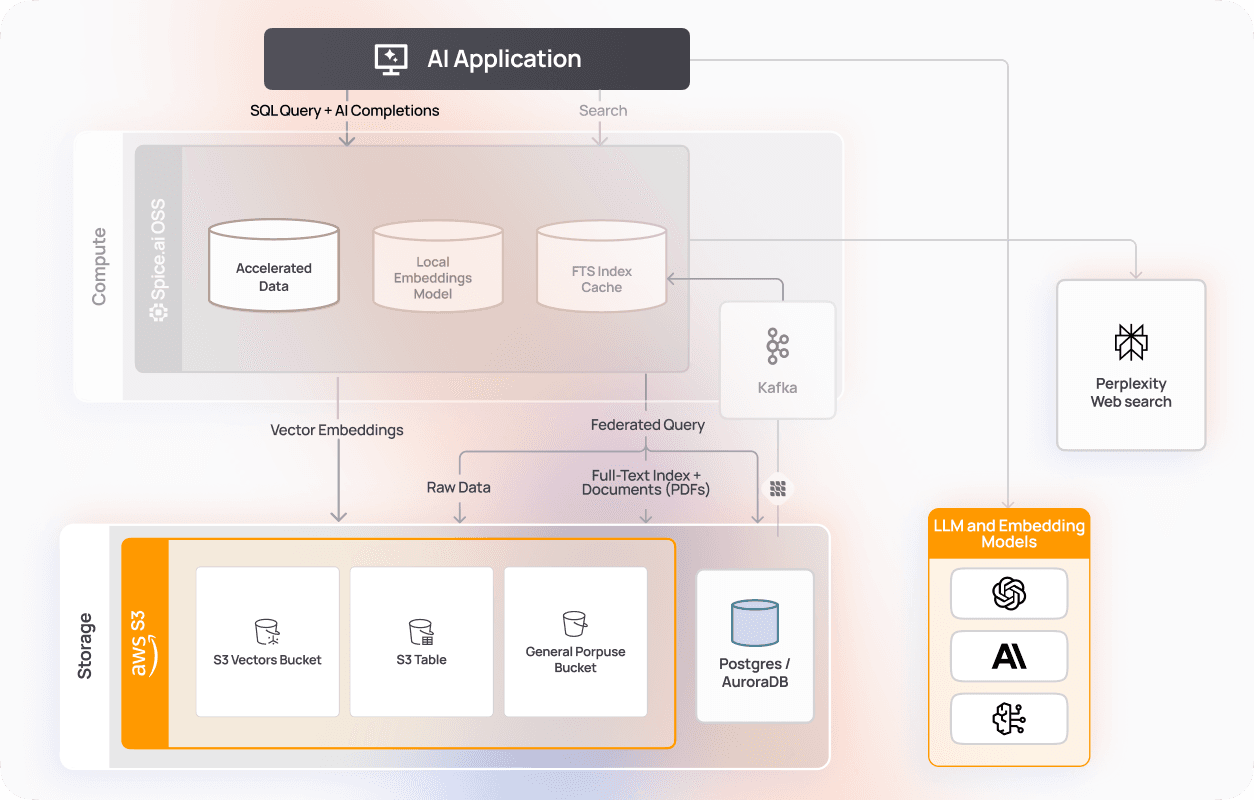

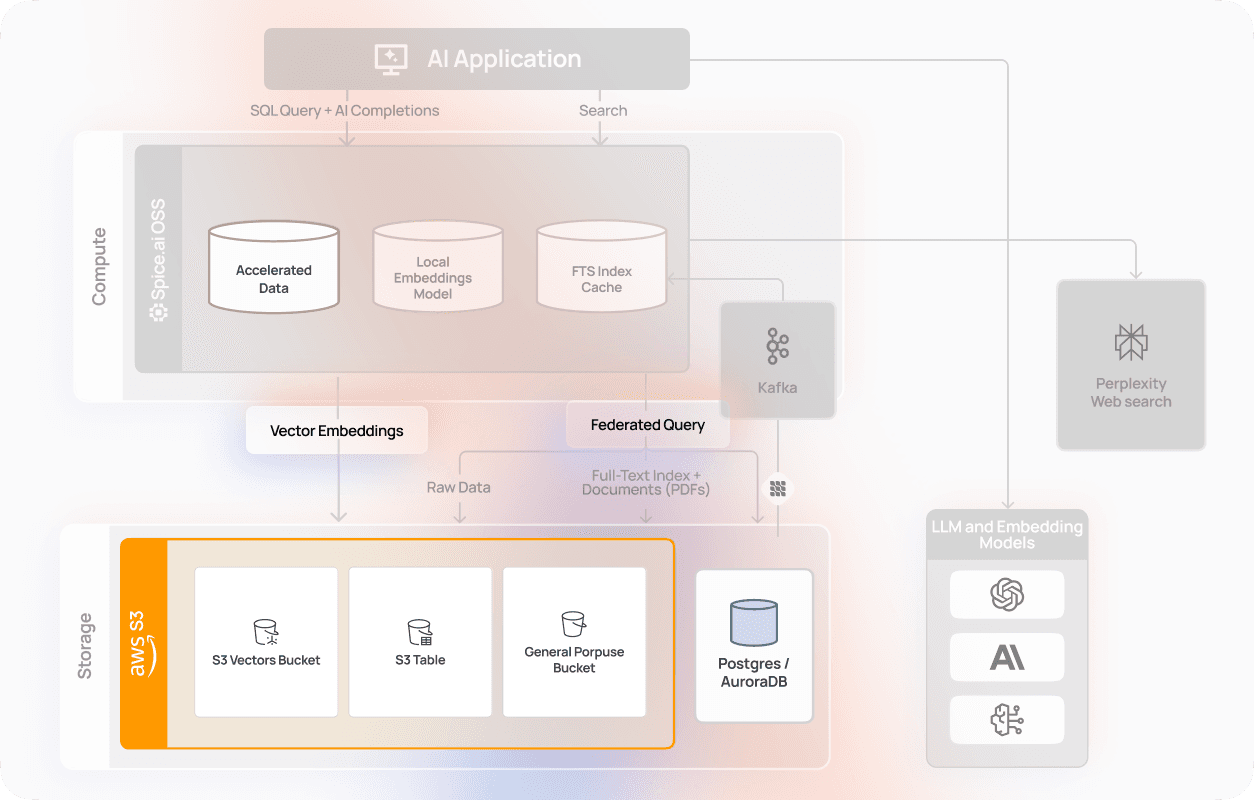

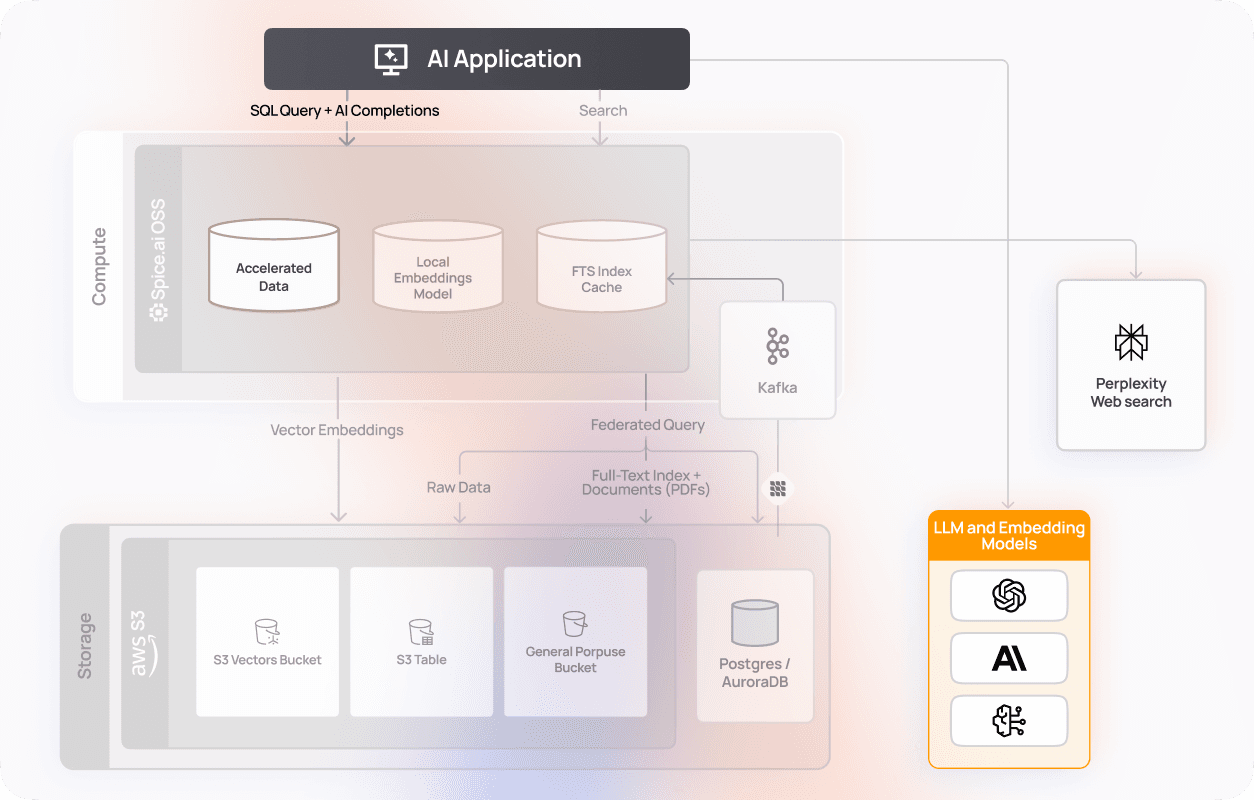

Build RAG pipelines that combine live, structured, and unstructured data with SQL and hybrid search. Retrieve, rank, and feed real-time context directly to your models.

Do more with your data

up to 100x faster queries

up to 80% cost savings on data lakehouse spend

Increase in data reliability

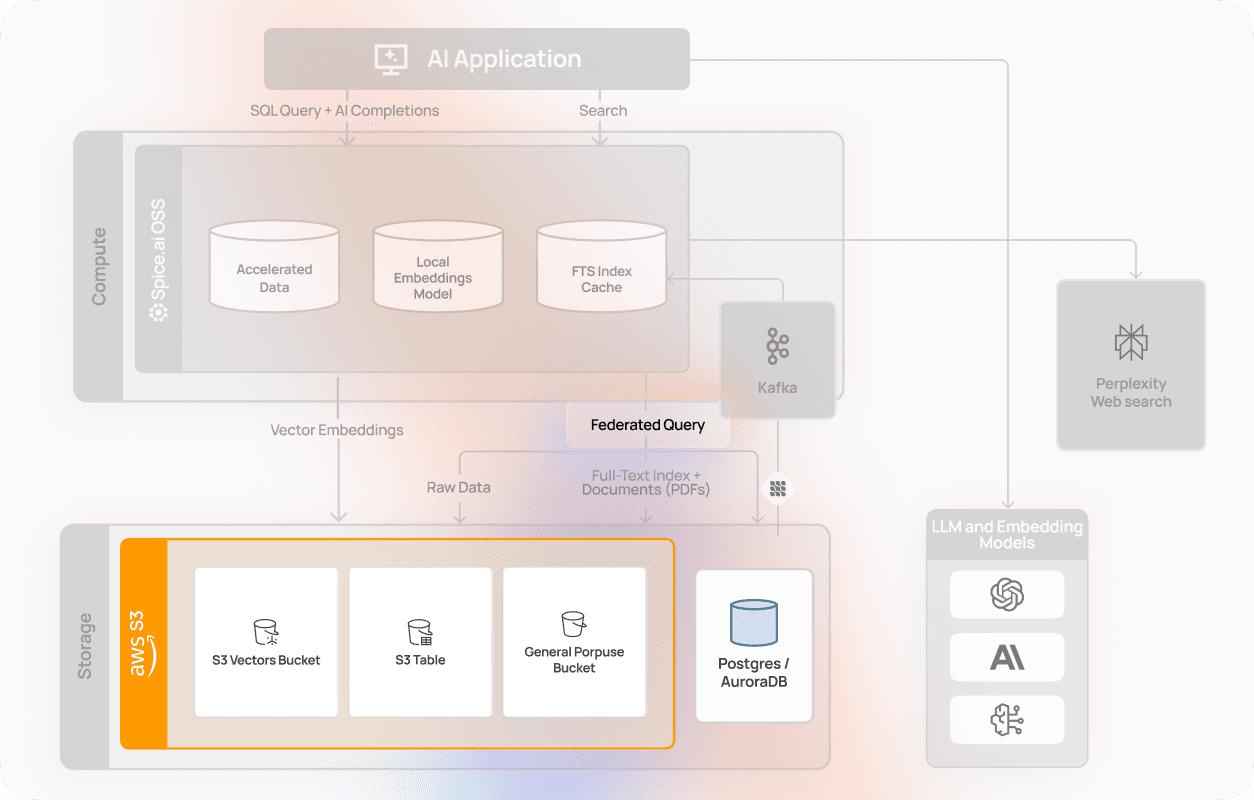

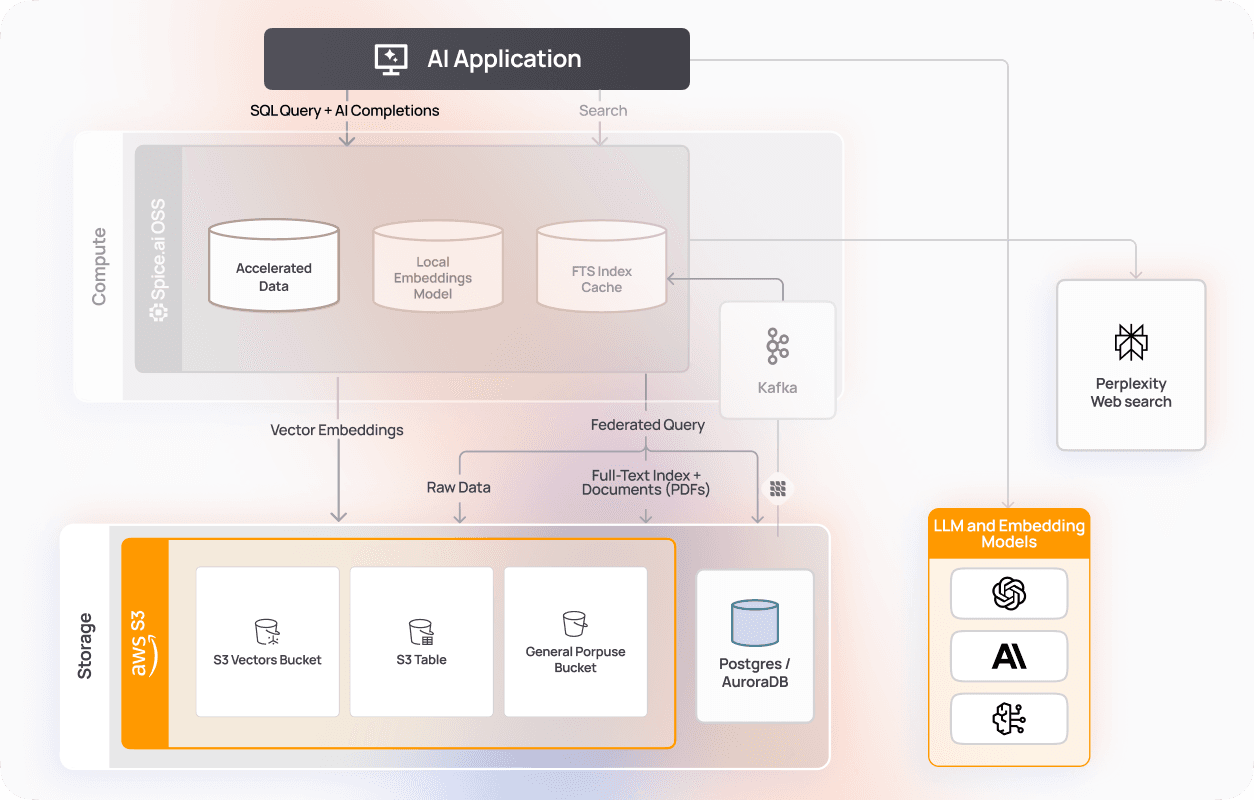

RAG breaks down without unified, real-time data

Fragmented RAG pipelines force developers to manage multiple search engines, connectors, and model APIs. As a result, models are grounded in incomplete or outdated data, leading to hallucinations, inconsistencies, and production risks.

Build data-grounded AI faster

Deliver accurate, context-rich results by combining SQL federation, hybrid search, and LLM inference in one governed runtime.

Federate structured data

Query operational and analytical data across databases, object stores, and APIs using standard SQL, in real time and with zero ETL.
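To make "one SQL query across several sources" concrete, here is a minimal local sketch. It uses Python's built-in `sqlite3` module with two `ATTACH`ed in-memory databases as stand-ins for an operational database and an analytical store. This is not Spice's connector API; the schema names `ops` and `lake` and the function `federated_orders_report` are hypothetical and exist only for illustration.

```python
import sqlite3


def federated_orders_report() -> list[tuple[str, int, float]]:
    # One connection plays the role of the query engine. Each ATTACHed
    # in-memory database stands in for a separate source (hypothetical names).
    con = sqlite3.connect(":memory:")
    con.execute("ATTACH DATABASE ':memory:' AS ops")
    con.execute("ATTACH DATABASE ':memory:' AS lake")

    con.execute("CREATE TABLE ops.customers (id INTEGER PRIMARY KEY, name TEXT)")
    con.execute("CREATE TABLE lake.orders (customer_id INTEGER, amount REAL)")
    con.executemany("INSERT INTO ops.customers VALUES (?, ?)", [(1, "Acme"), (2, "Globex")])
    con.executemany("INSERT INTO lake.orders VALUES (?, ?)", [(1, 120.0), (1, 80.0), (2, 50.0)])

    # A single standard-SQL query joins tables that live in two different databases.
    rows = con.execute(
        """
        SELECT c.name, COUNT(*) AS n_orders, SUM(o.amount) AS total
        FROM ops.customers AS c
        JOIN lake.orders AS o ON o.customer_id = c.id
        GROUP BY c.name
        ORDER BY total DESC
        """
    ).fetchall()
    con.close()
    return rows
```

Calling `federated_orders_report()` returns one row per customer with its order count and total. The point is only that the join is expressed once, in SQL, without first copying either table into the other store.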

Search and embed unstructured data

Create embeddings from text, documents, or logs using local or hosted models. Run vector similarity search directly inside Spice to find relevant context, semantic matches, and related insights instantly.
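The core of vector similarity search is: embed the query, embed each document, and rank by cosine similarity. The sketch below shows that loop in plain Python. The `embed` function is a deliberately toy, hypothetical stand-in (hashed bag-of-words) for a real local or hosted embedding model, and the brute-force scan stands in for whatever index a production engine would use.

```python
import hashlib
import math

DIM = 64  # hypothetical embedding width for this toy example


def embed(text: str) -> list[float]:
    # Toy stand-in for an embedding model: hash each lowercase token into one
    # of DIM buckets, then L2-normalize. A real model captures meaning; this
    # only captures word overlap.
    vec = [0.0] * DIM
    for token in text.lower().split():
        bucket = int(hashlib.sha256(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine(a: list[float], b: list[float]) -> float:
    # Both vectors are unit-length (or all zeros), so the dot product is the cosine.
    return sum(x * y for x, y in zip(a, b))


def vector_search(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Brute-force nearest neighbours: score every document, keep the top k.
    q = embed(query)
    scored = [(cosine(q, embed(doc)), doc) for doc in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]
```

Swapping `embed` for a real model changes the quality of the matches but not the shape of the search.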

Retrieve and augment intelligently

Blend structured SQL results and semantic search results in one query using hybrid search. Deliver precise, context-rich data to your LLMs without manual data engineering or pipeline maintenance.
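One way to blend structured and semantic retrieval in a single query is to apply an ordinary SQL `WHERE` filter and then order the surviving rows by vector similarity. The sketch below does this in SQLite by registering a Python `cosine_sim` function with `sqlite3.Connection.create_function`. The `tickets` table, its 3-dimensional "embeddings", and `hybrid_query` are all hypothetical; this illustrates the idea and is not how Spice implements hybrid search.

```python
import json
import math
import sqlite3


def cosine_sim(a_json: str, b_json: str) -> float:
    # Vectors are stored as JSON text because SQLite has no native vector type.
    a, b = json.loads(a_json), json.loads(b_json)
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def hybrid_query(query_vec: list[float], region: str, k: int = 2) -> list[tuple]:
    con = sqlite3.connect(":memory:")
    con.create_function("cosine_sim", 2, cosine_sim)
    con.execute("CREATE TABLE tickets (id INTEGER, region TEXT, title TEXT, embedding TEXT)")
    # Hypothetical precomputed embeddings; a real pipeline would produce these with a model.
    con.executemany("INSERT INTO tickets VALUES (?, ?, ?, ?)", [
        (1, "EU", "Payment failed at checkout", json.dumps([0.9, 0.1, 0.0])),
        (2, "EU", "Password reset email missing", json.dumps([0.1, 0.9, 0.0])),
        (3, "US", "Card declined during checkout", json.dumps([0.8, 0.2, 0.1])),
        (4, "EU", "Refund not received", json.dumps([0.7, 0.0, 0.3])),
    ])
    rows = con.execute(
        """
        SELECT id, title, cosine_sim(embedding, ?) AS score
        FROM tickets
        WHERE region = ?           -- structured filter
        ORDER BY score DESC        -- semantic ranking
        LIMIT ?
        """,
        (json.dumps(query_vec), region, k),
    ).fetchall()
    con.close()
    return rows
```

With `query_vec=[1.0, 0.0, 0.0]` and `region="EU"`, ticket 3 scores highly but is excluded by the structured filter, so the top two results are tickets 1 and 4.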

Generate insights with the AI Gateway

Invoke hosted models from providers such as OpenAI and Anthropic, or local LLMs, directly in SQL queries. Feed retrieved context into the model to generate accurate, compliant, contextual results within the same SQL workflow.
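The "feed retrieved context into the model" step usually comes down to assembling a grounded prompt from retrieved rows. The sketch below shows that step outside SQL, in plain Python. The prompt wording, the `max_rows` cap, and the `generate` callable are hypothetical. `generate` represents whatever hosted or local model you call; it is not a real Spice or provider API.

```python
from typing import Callable, Sequence


def build_grounded_prompt(question: str, context_rows: Sequence[str], max_rows: int = 5) -> str:
    # Number each retrieved snippet so the answer can cite it, and cap how many
    # snippets go in so the prompt stays within the model's context budget.
    numbered = [f"[{i}] {row}" for i, row in enumerate(context_rows[:max_rows], start=1)]
    header = "Answer using only the context below. If the context is insufficient, say so."
    return f"{header}\n\nContext:\n" + "\n".join(numbered) + f"\n\nQuestion: {question}\nAnswer:"


def answer(question: str, context_rows: Sequence[str], generate: Callable[[str], str]) -> str:
    # `generate` is a hypothetical hook for the model call: it takes the full
    # prompt string and returns the model's completion.
    return generate(build_grounded_prompt(question, context_rows))
```

Injecting `generate` as a parameter keeps the prompt logic testable without a live model; passing `lambda p: p` simply echoes the prompt back.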

Trusted by teams building intelligent applications

Run data-intensive workloads on a high-performance engine trusted by teams building real-time systems at scale.

"Partnering with Spice AI has transformed how NRC Health delivers AI-driven insights. By unifying siloed data across systems, we accelerated AI feature development, reducing time-to-market from months to weeks - and sometimes days. With predictable costs and faster innovation, Spice isn't just solving some of our data and AI challenges - it's helping us redefine personalized healthcare."

Tim Ottersburg

VP of Technology, NRC Health

"Spice AI grounds AI in our actual data, using SQL queries across all our data. This brings accuracy to probabilistic AI systems, which are very prone to hallucinations."

Rachel Wong

CTO, Basis Set

Trusted by global enterprises

Build a scalable RAG app

Guides and examples to learn more about building RAG applications with Spice.

Hybrid Search Docs

Spice provides robust search capabilities enabling developers to query datasets beyond traditional SQL, including semantic (vector-based) search, full-text keyword search, and hybrid search methods.
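Full-text keyword search is commonly scored with Okapi BM25, which rewards documents that contain rare query terms and damps repeated terms and long documents. The sketch below is a self-contained BM25 ranker with the usual defaults `k1=1.5` and `b=0.75`. It is a generic illustration of keyword scoring, not a description of how Spice's full-text index computes scores; `bm25_rank` is a hypothetical helper name.

```python
import math
from collections import Counter


def bm25_rank(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[tuple[str, float]]:
    # Okapi BM25 over lowercase, whitespace-split tokens; no stemming or stop words.
    if not docs:
        return []
    tokenized = [doc.lower().split() for doc in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n or 1.0
    # Document frequency: how many documents contain each term at least once.
    df = Counter(term for terms in tokenized for term in set(terms))
    results = []
    for idx, terms in enumerate(tokenized):
        tf = Counter(terms)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            # Rare terms get a larger inverse document frequency weight.
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            # Term frequency saturates via k1; b normalizes for document length.
            norm = k1 * (1 - b + b * len(terms) / avgdl)
            score += idf * tf[term] * (k1 + 1) / (tf[term] + norm)
        if score > 0:
            results.append((docs[idx], score))
    # Highest score first; ties keep the original document order (stable sort).
    results.sort(key=lambda pair: pair[1], reverse=True)
    return results
```

Only documents that share at least one term with the query are returned, which is exactly the exact-match limitation that vector search is meant to complement.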

True Hybrid Search: Vector, Full-Text, and SQL in One Runtime

TL;DR Show Me the (Data)! It’s well established (and maybe even trite) to say that enterprises are going all-in on artificial intelligence, with more than $40 billion directed toward generative AI projects in recent years. The initial results have been underwhelming. A recent study from the Massachusetts Institute of Technology's NANDA initiative concluded that despite the enormous allocation of […]

Real-Time Hybrid Search Using RRF: A Hands-On Guide with Spice

Surfacing relevant answers to searches across datasets has historically meant navigating significant tradeoffs. Keyword (or lexical) search is fast, cheap, and commoditized, but limited by the constraints of exact matching. Vector (or semantic) search captures nuance and intent, but can be slower, harder to debug, and expensive to run at scale. Combining both usually entails standing up multiple engines […]
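Reciprocal Rank Fusion (RRF) merges several ranked lists, for example one from keyword search and one from vector search, using only each document's rank: score(d) = Σ 1 / (k + rank(d)), summed over the lists in which d appears, with ranks starting at 1. The sketch below implements that formula in plain Python with `k = 60`, a commonly used default. The function name and the sample IDs are hypothetical, and this is a generic RRF illustration rather than the code from the guide.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    # Each ranking lists document IDs best-first. A document's fused score is
    # the sum over rankings of 1 / (k + rank), with rank starting at 1.
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by fused score, breaking ties by ID so the output is deterministic.
    return sorted(scores, key=lambda d: (-scores[d], d))
```

For example, fusing a keyword ranking `["a", "b", "c"]` with a vector ranking `["b", "c", "d"]` puts `"b"` first because it ranks well in both lists. Because RRF ignores raw scores, it sidesteps the problem that BM25 scores and cosine similarities are on different scales.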

See Spice in action

Get a guided walkthrough of how development teams use Spice to query, accelerate, and integrate AI for mission-critical workloads.

Get a demo