Serve real-time data and AI from object storage

Retain the scalability of object storage and the governance of open table formats. Add Spice to federate, accelerate, and power operational and AI workloads with millisecond query performance.

Do more with your data

up to 100x faster queries

up to 80% cost savings on data lakehouse spend

increase in data reliability for critical workloads

Lakehouses weren't built for operational workloads

Traditional data lakehouses handle analytics well but lag for modern apps and AI agents that need sub-second responses and federated access. The result is slow queries, complex pipelines, and high costs when serving real-time operational data.

Turn your lakehouse into an operational data layer

Make your data lakehouse fast, federated, and AI-ready, serving live workloads at millisecond latency.

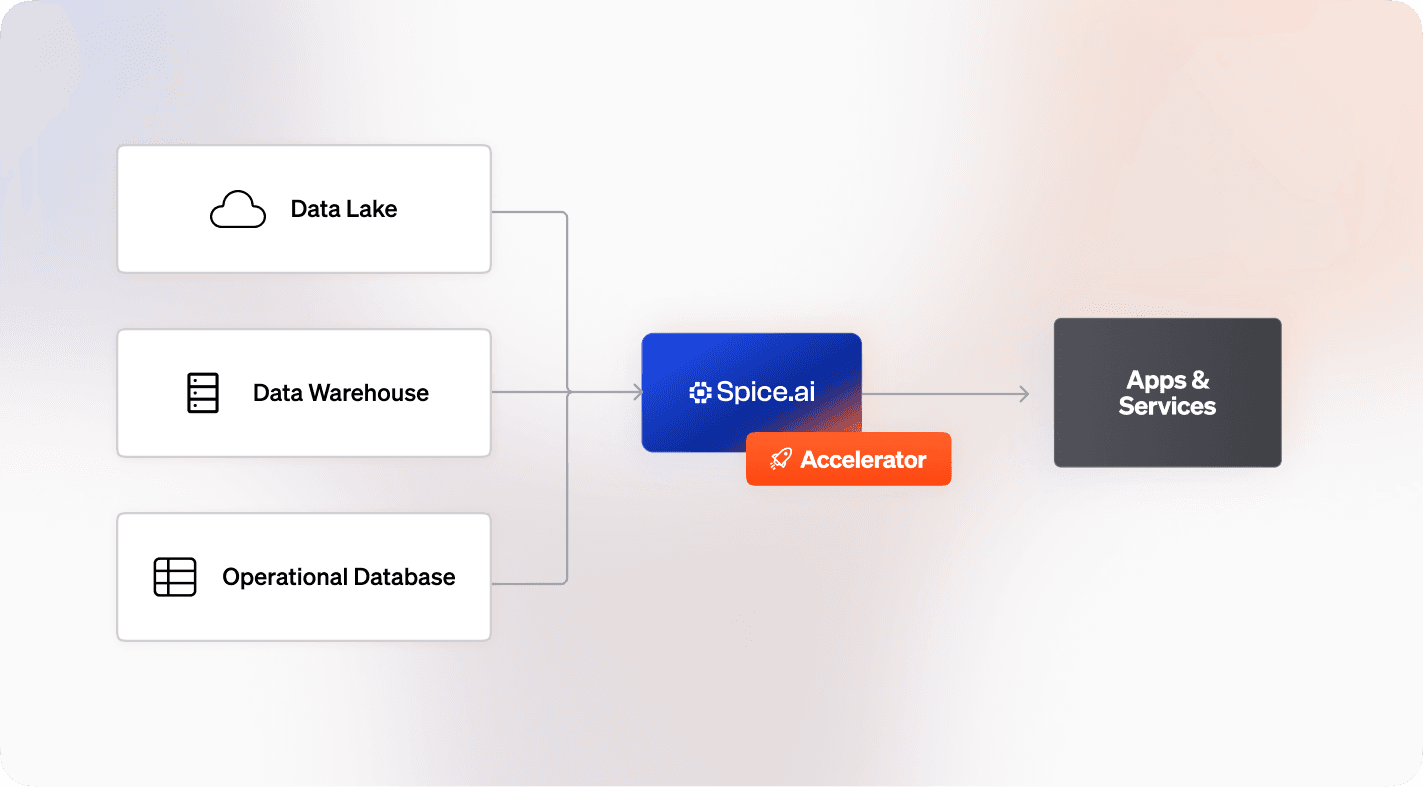

Federate across sources

Query databases, APIs, and object storage using standard SQL. Combine transactional and analytical data in a single query with zero ETL.
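To make the idea of a single query across sources concrete, here is a minimal conceptual sketch. It does not use Spice or its API: it uses Python's built-in `sqlite3` module, with two separate in-memory databases standing in for a transactional database and an object-storage dataset. The table names, schema names (`main`, `lake`), and sample rows are all hypothetical.

```python
import sqlite3


def federated_order_totals():
    """Join two independent stores in one SQL statement (illustration only)."""
    # Assumption: `main` stands in for a transactional database.
    conn = sqlite3.connect(":memory:")
    # Assumption: `lake` stands in for analytical data in object storage.
    conn.execute("ATTACH DATABASE ':memory:' AS lake")

    conn.execute("CREATE TABLE main.customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE lake.orders (customer_id INTEGER, amount REAL)")
    conn.executemany(
        "INSERT INTO main.customers VALUES (?, ?)",
        [(1, "Ada"), (2, "Grace")],
    )
    conn.executemany(
        "INSERT INTO lake.orders VALUES (?, ?)",
        [(1, 20.0), (1, 5.0), (2, 12.5)],
    )

    # One query spans both stores; nothing is copied between them first.
    rows = conn.execute(
        """
        SELECT c.name, SUM(o.amount) AS total
        FROM main.customers AS c
        JOIN lake.orders AS o ON o.customer_id = c.id
        GROUP BY c.name
        ORDER BY c.name
        """
    ).fetchall()
    conn.close()
    return rows
```

Calling `federated_order_totals()` returns one row per customer with the summed order amount. A federated engine applies the same pattern to genuinely remote sources, where the work of planning and pushing down the query is far more involved than this toy shows.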

Accelerate object storage performance

Use data accelerators like Spice Cayenne, DuckDB, or SQLite to materialize and cache hot datasets locally. Reduce query latency from seconds to milliseconds while maintaining the scale and economics of object storage.
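The materialize-and-cache pattern described above can be sketched in a few lines. This is a conceptual illustration, not Spice's implementation or API: the `LocalAccelerator` class, the `trips` table, and the `fetch_rows` callback are hypothetical names, and an in-memory SQLite database stands in for the local accelerator engine.

```python
import sqlite3
from typing import Callable, Iterable, Tuple

Row = Tuple[int, float]  # (trip_id, fare), a hypothetical schema


class LocalAccelerator:
    """Keeps a local SQLite copy of a dataset that is slow to read remotely."""

    def __init__(self, fetch_rows: Callable[[], Iterable[Row]]):
        # fetch_rows stands in for a scan of the source in object storage.
        self._fetch_rows = fetch_rows
        self._db = sqlite3.connect(":memory:")
        self._db.execute(
            "CREATE TABLE trips (trip_id INTEGER PRIMARY KEY, fare REAL)"
        )
        self.refresh()

    def refresh(self) -> None:
        """Re-read the source and replace the local copy in one transaction."""
        rows = list(self._fetch_rows())
        with self._db:  # commits on success, rolls back on error
            self._db.execute("DELETE FROM trips")
            self._db.executemany("INSERT INTO trips VALUES (?, ?)", rows)

    def query(self, sql: str, params: tuple = ()) -> list:
        """Serve queries from the local copy without touching the source."""
        return self._db.execute(sql, params).fetchall()
```

Queries hit only the local copy, so the source is read once per refresh rather than once per query. How often to refresh, and whether to refresh fully or incrementally, is the main design choice such a layer has to make.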

Serve operational and AI workloads

SQL federation, acceleration, and AI inference in one runtime means you can support disparate workloads directly from your data lakehouse, all in real time.

Native open table format support

Connect to Apache Iceberg and Delta Lake tables for schema management, ACID transactions, and optimized query planning, or query Parquet files directly.

Proven in production

Run data-intensive workloads on a high-performance engine trusted by teams building real-time systems at scale.

"Spice opened the door to take these critical control-plane datasets and move them next to our services in the runtime path."

Peter Janovsky

Software Architect, Twilio

Faster queries

"It just spins up and works, which is really nice. The responsiveness is amazing, which is a huge gain for the customer."

Darin Douglass

Principal Software Engineer, Barracuda

"Partnering with Spice AI has transformed how NRC Health delivers AI-driven insights. By unifying siloed data across systems, we accelerated AI feature development, reducing time-to-market from months to weeks - and sometimes days. With predictable costs and faster innovation, Spice isn't just solving some of our data and AI challenges - it's helping us redefine personalized healthcare."

Tim Ottersburg

VP of Technology, NRC Health

Trusted by global enterprises

Use Spice as an operational data lakehouse

Guides and examples to learn more about building an operational data lakehouse with Spice.

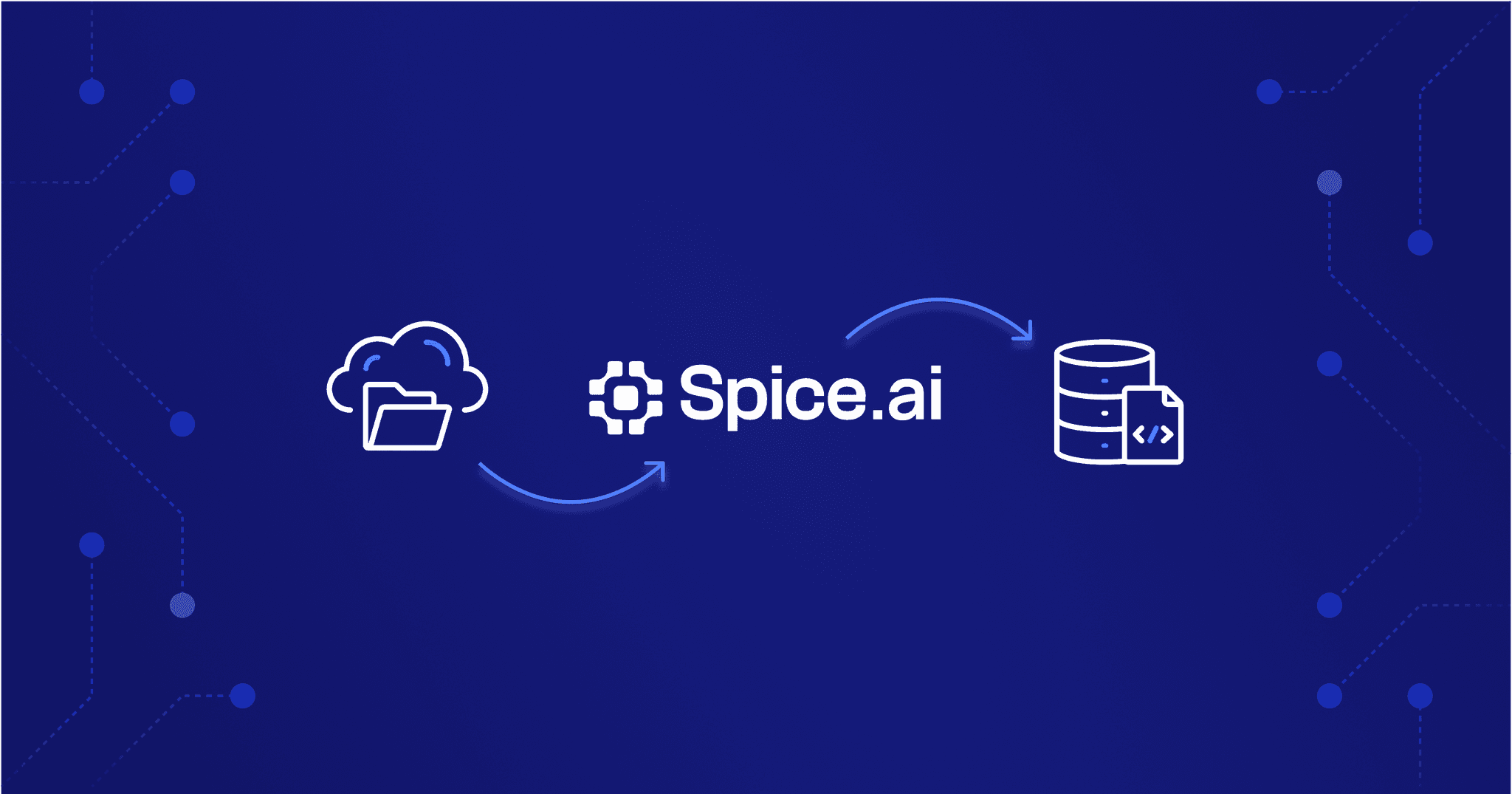

Making Object Storage Operational for Real-Time and AI Workloads

Although legacy systems and workflows remain common, many enterprises are re-evaluating their architectures to meet new demands – driven in part, but not exclusively, by AI – that require support for more data-intensive and real-time applications. The underlying storage needs for these novel workloads are generally outside the bounds of a traditional operational […]

Federated SQL Query Cookbook

Learn how to fetch combined data from S3 Parquet, PostgreSQL, and Dremio in a single query.

Data Acceleration with DuckDB

This recipe walks through how to accelerate a local copy of the taxi trips dataset stored in S3 using DuckDB as the data accelerator engine.

See Spice in action

Get a guided walkthrough of how development teams use Spice to query, accelerate, and integrate AI for mission-critical workloads.

Get a demo