The operational data lakehouse

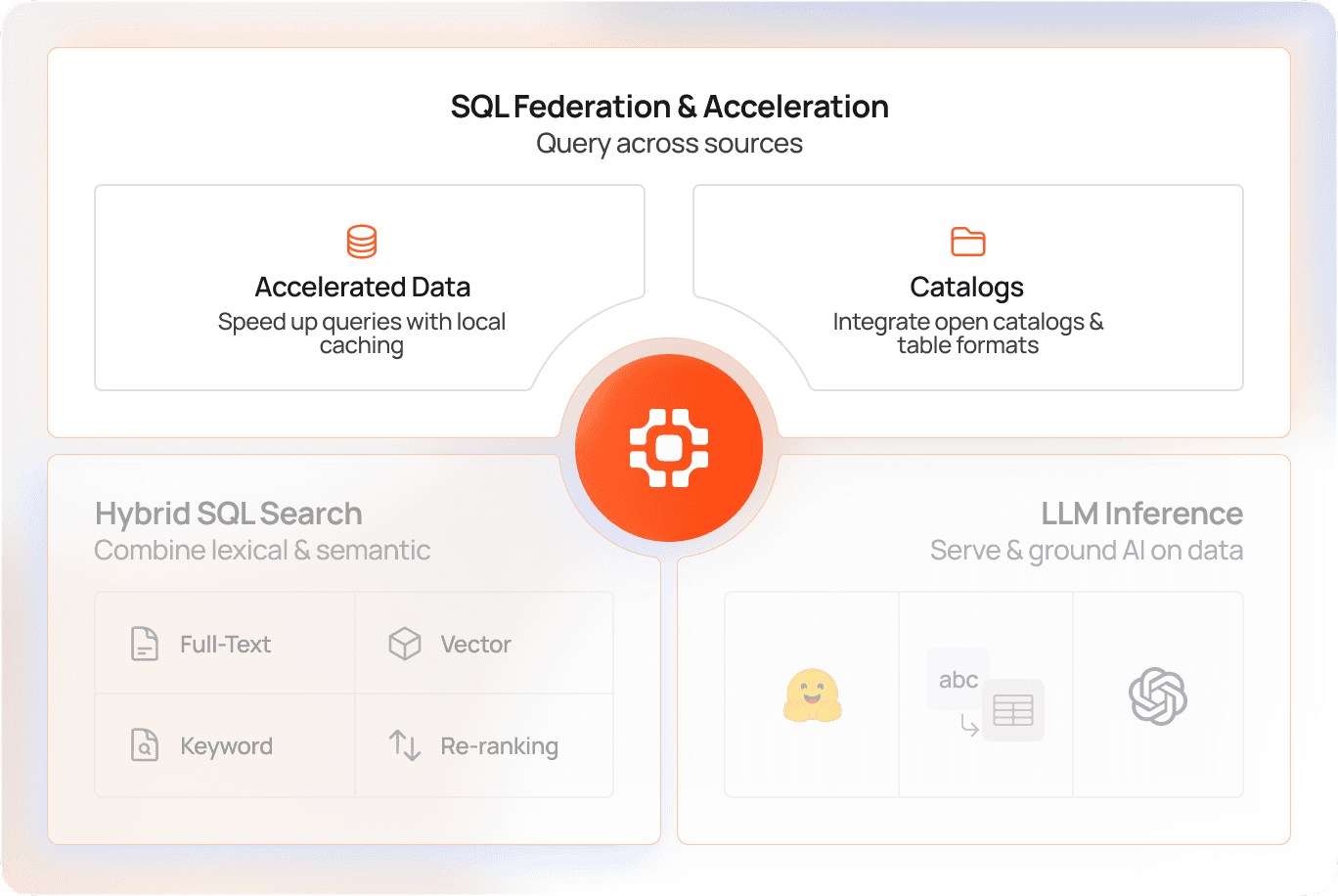

Open-source SQL federation, data acceleration, and hybrid search for data-intensive AI apps.

Deployed in production by global enterprises

Ground apps and AI agents in enterprise data

Query, accelerate, search, and integrate AI across your data estate with zero ETL.

Fast and federated access to all of your data

Connect to and query operational databases, data lakes, and warehouses across the enterprise. Materialize and accelerate working sets in-memory or on disk for millisecond access.
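As a rough illustration of what materializing a working set means, the sketch below copies the result of a query from a source database into a separate in-memory store and then queries the local copy. It uses Python's built-in `sqlite3` module as a stand-in for both sides; it does not use Spice or show how Spice implements acceleration. The helper `materialize_working_set`, the `orders` table, and the sample rows are all hypothetical.

```python
import sqlite3


def materialize_working_set(source, query, table, columns):
    """Copy the rows returned by `query` on `source` into a new in-memory database."""
    cache = sqlite3.connect(":memory:")
    cache.execute(f"CREATE TABLE {table} ({', '.join(columns)})")
    placeholders = ", ".join("?" for _ in columns)
    # The source cursor is iterated row by row and inserted into the local copy.
    cache.executemany(
        f"INSERT INTO {table} VALUES ({placeholders})",
        source.execute(query),
    )
    cache.commit()
    return cache


# Stand-in for a remote operational database (hypothetical schema and data).
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER, status TEXT, total REAL)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "open", 10.0), (2, "closed", 25.5), (3, "open", 7.25)],
)

# Materialize only the working set (open orders), then query the local copy.
cache = materialize_working_set(
    source,
    "SELECT id, total FROM orders WHERE status = 'open'",
    "open_orders",
    ["id", "total"],
)
open_total = cache.execute("SELECT SUM(total) FROM open_orders").fetchone()[0]
print(open_total)
```

Later reads hit the local copy instead of the source, which is the general idea behind accelerating a working set.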

Combine keyword, vector, and full-text search in SQL

Use standard SQL to power hybrid search pipelines and deliver fast and context-aware results for search-driven apps. Rank structured filters, semantic similarity, and keyword matches to optimize relevance and robustness in your search results.
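One common way to combine keyword and vector rankings into a single result list is reciprocal rank fusion (RRF). The sketch below is a generic illustration of that technique in plain Python, not a description of how Spice ranks results. The function name, the document IDs, and the constant `k=60` (a conventional default for RRF) are assumptions made for the example.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one list.

    Each document scores 1 / (k + rank) for every list it appears in,
    where rank starts at 1. Higher total scores rank first.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; break ties by document ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a keyword search and a vector search.
keyword_hits = ["doc_a", "doc_b", "doc_c"]
vector_hits = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)
```

Documents that appear near the top of both lists rise to the top of the fused list, while documents found by only one method still appear further down.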

Call LLMs directly from the query layer

Call hosted or local LLMs inline using SQL UDFs or natural language. Translate text, generate summaries, classify entities, and augment query results on your enterprise data without leaving the Spice runtime.

Do more with your data

Up to 100x faster queries

Up to 80% cost savings on data lakehouse spend

Increased data reliability for critical workloads

Proven in production

From messaging platforms to security systems, companies like Twilio and Barracuda rely on Spice to deliver low-latency apps and AI agents at scale.

Get started with Spice

Explore guides and examples that show how to query data, build apps, and integrate AI in minutes.

Spice.ai OSS Documentation

Visit the Spice open-source docs to learn how Spice works under the hood.

Spice.ai OSS Cookbook

Over 80 guides and samples to help you build data-grounded AI apps and agents with Spice.

Product Demos

Hands-on product demos to accelerate your learning with Spice.

See Spice in action

Get a guided walkthrough of how development teams use Spice to query, accelerate, and integrate AI for mission-critical workloads.

Get a demo