Spice Cloud v1.8.0: Iceberg Write Support, Acceleration Snapshots & More

Spice Cloud & Spice.ai Enterprise 1.8.0 are live! v1.8.0 includes Iceberg write support, acceleration snapshots, partitioned S3 Vector indexes, a new AI SQL function for LLM integration, and an updated Spice.js SDK.

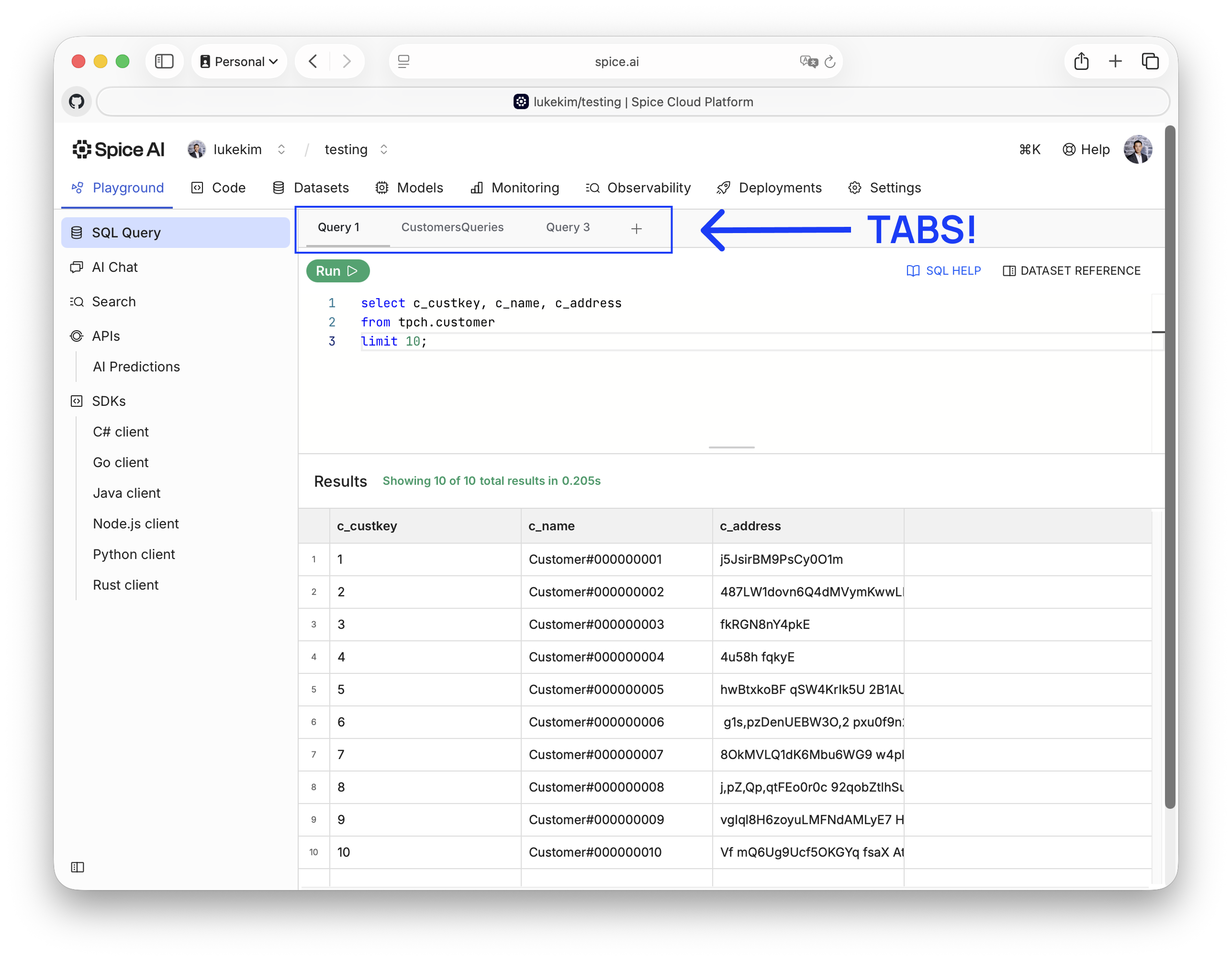

v1.8.0 also introduces developer experience upgrades, including a redesigned Spice Cloud dashboard with tabbed navigation.

Spice Cloud customers will automatically upgrade to v1.8.0 on deployment, while Spice.ai Enterprise customers can consume the Enterprise v1.8.0 image from the Spice AWS Marketplace listing.

What’s New in v1.8.0

Iceberg Write Support (Preview)

Spice now supports writing to Apache Iceberg tables using standard SQL INSERT INTO statements. This simplifies creating and updating Iceberg datasets in the Spice runtime, letting teams manipulate open table data directly with SQL instead of relying on third-party tools. Get started with Iceberg writes in Spice here.

Example queries:

-- Insert from another table
INSERT INTO iceberg_table
SELECT * FROM existing_table;

-- Insert with values
INSERT INTO iceberg_table (id, name, amount)
VALUES (1, 'John', 100.0), (2, 'Jane', 200.0);

-- Insert into catalog table
INSERT INTO ice.sales.transactions
VALUES (1001, '2025-01-15', 299.99, 'completed');

Acceleration Snapshots (Preview)

A new snapshotting system enables datasets accelerated with file-based engines (DuckDB or SQLite) to bootstrap from stored snapshots in object storage like S3 - significantly reducing cold-start latency and simplifying distributed deployments. Learn more.

Partitioned Amazon S3 Vector Indexes

Vector search at scale is now faster and more efficient with partitioned Amazon S3 Vector indexes - ideal for large-scale semantic search, recommendation systems, and embedding-based applications. Learn more.

AI SQL Function (Preview)

A new asynchronous ai SQL function lets developers call large language models (LLMs) directly from SQL, so LLM inference can run inside federated or analytical workflows without additional services. Learn more.
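As a rough illustration of calling the function from a query, the sketch below assumes that ai takes a prompt string and, optionally, a model name as a second argument, and that a model is already configured in the runtime. The taxi_zones table, its zone column, and the gpt-4o model name are hypothetical placeholders; check the linked documentation for the exact signature.

```sql
-- Prompt the default model (assumes a model is configured in the runtime)
SELECT ai('Summarize the benefits of open table formats in one sentence.');

-- Prompt a specific model by name (assumed optional second argument;
-- 'gpt-4o' is a placeholder for whatever model name is configured)
SELECT ai('Summarize the benefits of open table formats in one sentence.', 'gpt-4o');

-- Build the prompt from row data (taxi_zones and zone are hypothetical)
SELECT
  zone,
  ai(concat('Categorize the zone ', zone, ' in a single word. Return only the word.')) AS category
FROM taxi_zones
LIMIT 10;
```

In the last query each returned row presumably results in its own model call, so keeping a LIMIT on exploratory queries is a sensible way to bound cost and latency.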

Spice.js v3.0.3 SDK

v3.0.3 brings improved reliability and broader platform support. Highlights include new query methods, automatic transport fallback between gRPC and HTTP, and built-in health checks and dataset refresh controls. Learn more.

Bug & Stability Fixes

v1.8.0 also includes numerous fixes and improvements:

- Reliability: Improved logging, error handling, and network readiness checks across connectors (Iceberg, Databricks, etc.).

- Vector search durability and scale: Refined logging, stricter default limits, safeguards against index-only scans and duplicate results, and always-accessible metadata for robust queryability at scale.

- Cache behavior: Tightened cache logic for modification queries.

- Full-Text Search: FTS metadata columns now usable in projections.

- RRF Hybrid Search: Reciprocal Rank Fusion (RRF) UDTF enhancements for advanced hybrid search scenarios.

For more on v1.8.0, check out the full release notes.

v1.8 Release Community Call

Join us on Thursday, October 16th for live demos of the new functionality delivered in v1.8! Register here.

Resources to Get Started with Spice

- Sign up for Spice Cloud for free, or get started with Spice Open Source

- Explore the Spice cookbooks and docs

- Schedule a demo if you'd like to see the product live or have any questions
