The Operational Data Lakehouse

Deploy Data Apps & AI Agents at Scale

Intelligent applications demand real-time access to both operational and analytical data. Traditional lakehouses were built for batch analytics, not for latency-sensitive apps and AI. Spice is the platform that powers these modern workloads.

Spice eliminates the complexity of data pipelines and fragmented infrastructure. With one runtime, enterprises can deliver low-latency apps and agentic AI experiences on top of existing data systems.

Enterprise Data & AI Platform

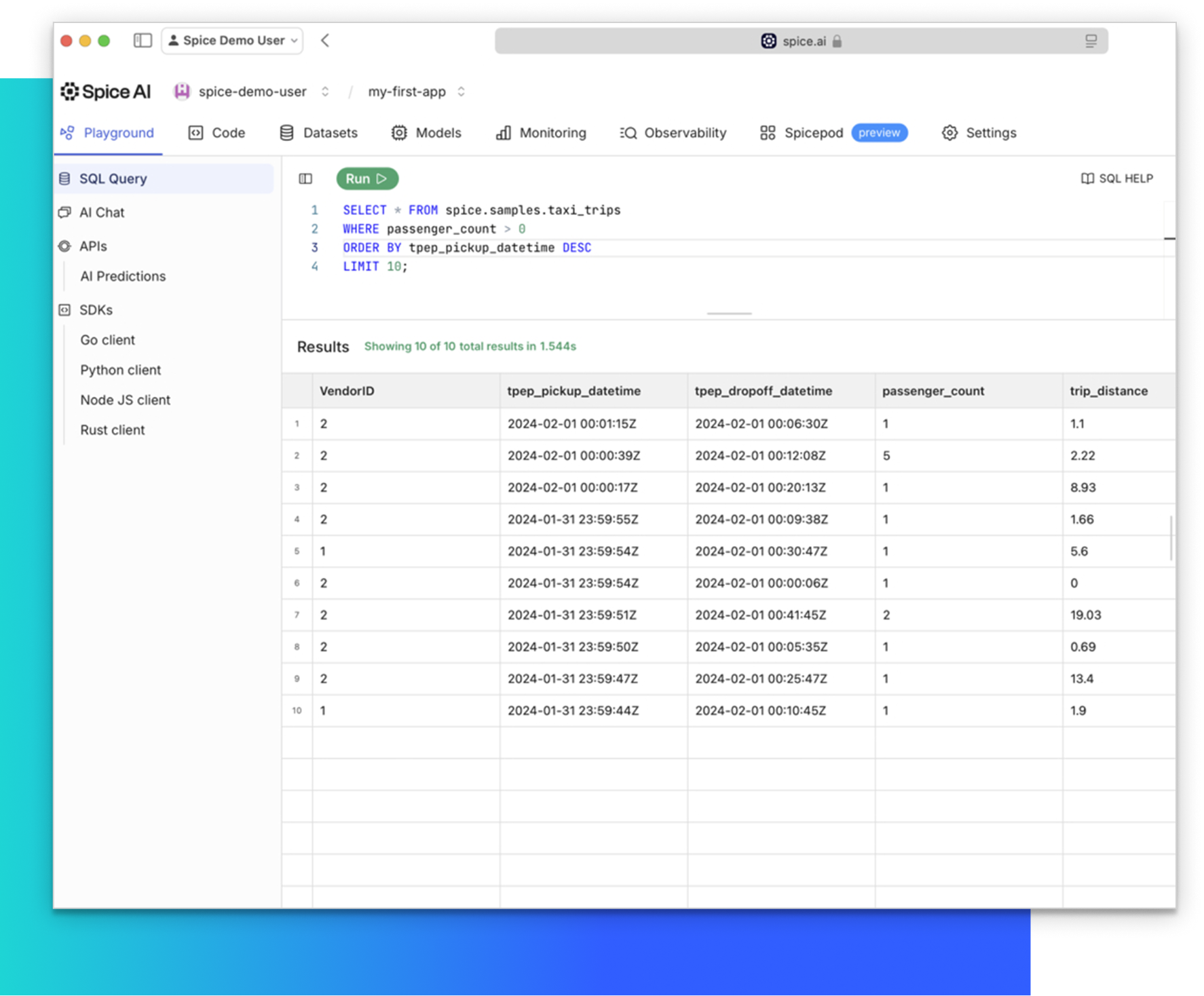

Spice packages the primitives critical to intelligent apps in one portable runtime: query federation and acceleration, hybrid search, and LLM inference.

Federate across OLTP, OLAP, and streaming sources in-place

Accelerate large datasets locally with DuckDB & Arrow

Run vector, keyword, and full-text search in one SQL statement

Build hybrid retrieval pipelines without extra infrastructure

Serve local or hosted LLMs from OpenAI, Anthropic, NVIDIA, and more in the Spice runtime

Combine inference with federated data and search to power latency-sensitive apps

Provide LLMs with memory persistence tools to store and retrieve information across conversations
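To make the hybrid retrieval idea above more concrete, here is a minimal sketch of one common way to merge a vector-search ranking with a keyword-search ranking: reciprocal rank fusion (RRF). This is a generic illustration, not a description of how Spice fuses results internally. The document ids, the function name `reciprocal_rank_fusion`, and the constant `k=60` are assumptions chosen for the example.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document ids into a single ranking.

    Each document scores 1 / (k + rank) for every list it appears in,
    so items ranked highly by more than one retriever rise to the top.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by id for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical result lists from two independent retrievers.
vector_hits = ["doc_a", "doc_b", "doc_c"]
keyword_hits = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([vector_hits, keyword_hits])
print(fused)
```

Documents that appear in both lists (`doc_a`, `doc_b`) outrank those found by only one retriever, which is the behavior a hybrid pipeline is usually after.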

Managed DuckDB instances for data storage and analytics

Connect Spice to Snowflake, Elasticsearch, MongoDB, and Postgres to query data wherever it lives.

Ingest data into private cloud data warehouses to cross-query with other data

Bare-metal ZK & ML GPU clusters

Managed Kubernetes and 24/7 livesite operations

Xilinx FPGAs (Coming soon)

Cloud-scale, high-availability JSON-RPC nodes capable of 1,000+ requests per second (RPS)

Web3 data and smart-contract indexing

Comprehensive Ethereum Beacon chain node and data support for stakers and restakers
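For readers unfamiliar with what a JSON-RPC node serves, the sketch below builds a JSON-RPC 2.0 request for the standard Ethereum method `eth_blockNumber` and decodes a reply. It makes no network call. The helper names `build_request` and `parse_result` and the sample reply are hypothetical and used only for illustration. No Spice endpoint URL is assumed.

```python
import json
from itertools import count

# Monotonic request ids so replies can be matched to requests.
_request_ids = count(1)


def build_request(method, params=None):
    """Build a JSON-RPC 2.0 request object for the given method."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": method,
        "params": params or [],
    }


def parse_result(raw_reply):
    """Return the 'result' field of a JSON-RPC reply, raising on errors."""
    reply = json.loads(raw_reply)
    if "error" in reply:
        raise RuntimeError(f"JSON-RPC error: {reply['error']}")
    return reply["result"]


request = build_request("eth_blockNumber")
payload = json.dumps(request)  # this string would be the HTTP POST body

# Made-up reply for illustration; a real node returns the current height.
sample_reply = '{"jsonrpc": "2.0", "id": 1, "result": "0x10d4f"}'
block_number = int(parse_result(sample_reply), 16)  # hex quantity to int
print(payload)
print(block_number)
```

Ethereum nodes encode quantities as hex strings, which is why the result is converted with base 16 before use.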

Security-First

Turn AI into Business Outcomes

Flexible Deployments, from On-Prem to Cloud

Spice.ai Intelligent Application Flywheel